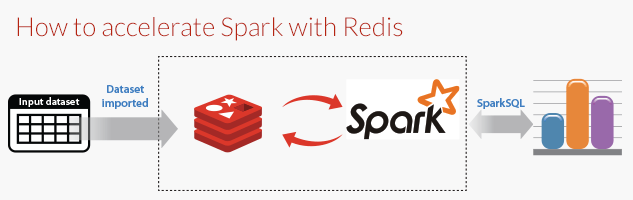

First will cover basic introduction of Apache spark & Redis, then we will see how we can use Redis in spark. Here we used Scala for writing code in spark.

Spark :

Apache Spark is an open-source distributed general-purpose cluster-computing framework. It is a fast, in-memory data processing engine with lots of APIs to allow data workers to efficiently execute streaming, machine learning or SQL workloads.

Redis :

Redis is an open source , in-memory data structure store, used as a database, cache and message broker. Redis has built-in replication, Lua scripting , LRU eviction, transaction and different level of on-disk persistence , and provide high availability via Redis Sentinel & automatic partitioning with Redis Cluster.

Spark-Redis library provide access to all Redis Data structures like String, List, Set & Hash from Spark RDD. It Also supports Reading & writing with RDD & Dataframe.

To get Spark-Redis Dependency using Maven add below code in pom.xml file.

<dependencies>

<dependency>

<groupId>com.redislabs</groupId>

<artifactId>spark-redis</artifactId>

<version>2.4.0</version>

</dependency>

</dependencies>

For Executing spark application with Redis, we need to pass Spark-Redis jar ( https://jar-download.com/?search_box=spark-redis ) & Redis Credential with spark-shell command. By default Redis use localhost:6379 without a password.

spark-shell --jars <path_to_redis_spark_jar>/spark-redis_<version>.jar --conf "spark.redis.host=localhost" --conf "spark.redis.port=6379"

Then you can import Redis Library in Spark-shell :

scala> import com.redislabs.provider.redis._

scala> import org.apache.spark.sql.SparkSession

scala> val spark = SparkSession.builder()

.appName("SparkRedis")

.master("local[*]")

.config("spark.redis.host", "localhost")

.config("spark.redis.port", "6379")

.getOrCreate()

Here You can either pass spark.redis.host & spark.redis.port in Spark-shell command or with SparkSession Object like above.

Reading Data From Redis :

We have inserted Few Keys in Redis, Below are Few ways through which we can fetch those key-value.

· Using fromRedisKV we can fetch all String value matching with key. Key can be pattern also.

In above example, we are getting all the key-value starting with keys alph*.

Like above There are multiple function to fetch different Type of value. Few of them are below :

- val hashTypeRDD = sc.fromRedisHash(“keyPattern *”) //For Fetching Hash Type of value.

- val listTypeRDD = sc.fromRedisList(“keyPattern*”) //For Fetching List of value.

- val setTypeRDD = sc.fromRedisSet(“keyPattern*”) //For Fetching Set of Value.

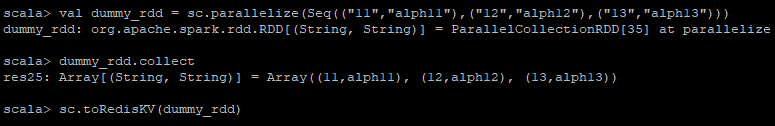

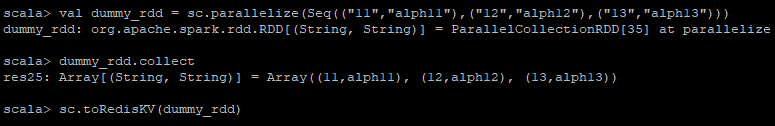

Writing Data To Redis :

For writing data to redis use toRedisKV . Here we have created RDD[String,String], Then write it in Redis.

On Redis Shell (To start redis, first start redis-server then start redis-cli from redis src folder/set redis path , to install redis have a look https://tecadmin.net/install-redis-ubuntu/ ):

Like above There are multiple function to write different Type of data in Redis . Few of them are below :

- sc.toRedisHASH(hashRDD, hashName) //For storing data in Redis Hash.

- sc.toRedisLIST(listRDD, listName) //For storing data in Redis List.

- sc.toRedisSET(setRDD, setName) //For storing data in Redis Set.

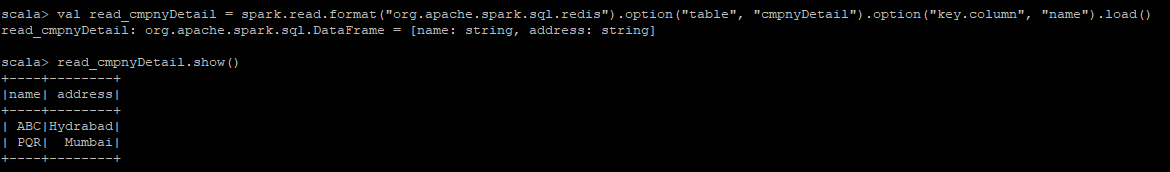

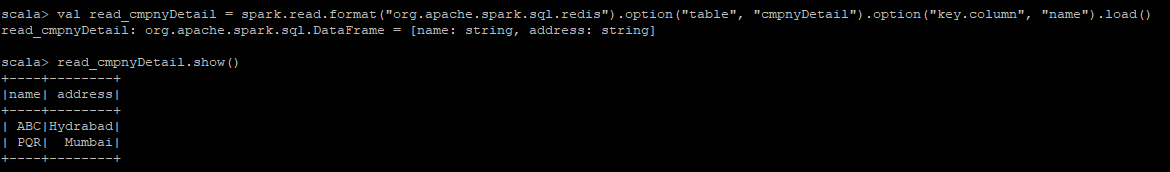

Writing Spark Dataframe in Redis :

For writing spark Dataframe in redis, First we will create DataFrame in Spark.

Then Write above Dataframe in Redis,

We can check this data in Redis, As this data is hash so fetching it using HGETALL

Writing Spark Dataframe in Redis :

Then we will read cmpnyDetail table that we have created in Redis earlier in spark using,

While reading & writing we can specify below options ,

- Table : Name of the redis table for reading & writing Data.

- number : Number of partition for reading Dataframe.

- column : Specify unique column used as Redis Key. By Defult key is auto-generated.

- ttl : Data time to live, Data doesn’t expire if ttl is less than 1.

- schema : infer schema from random row, all columns will have String type

Structured Streaming :

Spark-redis support Redis Stream data Structure as a source for Structured Streaming.

The following example reads data from a Redis Stream empStream that has two fields empId and empName:

val employee = spark.readStream

.format("org.apache.spark.sql.redis")

.option("stream.keys", "empStream ")

.schema(StructType(Array(

StructField("empId", StringType),

StructField("empName", FloatType)

))).load()

val query = employee.writeStream

.format("console")

.start()

query.awaitTermination()